Classification, Curiosity, and the Evolution of Understanding

This morning, coffee in hand, I found myself reading a paper about large language models developing metalinguistic abilities—the capacity not just to use language, but to analyze and reason about its structure. The authors tested whether GPT-4 and OpenAI's new o1 model could perform tasks that linguists do: draw syntactic trees, identify recursive structures, recognize phonological patterns in artificial languages. The results were striking. While earlier models struggled, o1's chain-of-thought reasoning enabled it to succeed on complex analytical tasks, even generating correct analyses of rare structures like center-embedded clauses that are virtually absent from natural discourse.

What caught my attention wasn't just the technical achievement, though that's impressive enough. It was the fundamental question the paper illuminates: can these systems move beyond pattern recognition to something approaching genuine understanding? Can they not just classify, but reason about classification itself?

I've spent my life thinking about classification. From the mundane—organizing sensors and equipment in my home laboratory—to the profound: understanding biological evolution, ecological dynamics, even cosmic origins. Classification is how we make sense of complexity. It's how we recognize boundaries, build hierarchies, decide what features are essential versus incidental. Whether you're deciding if a soil moisture reading represents an anomaly or a pattern shift, whether two populations constitute distinct species, or whether a celestial structure reveals something fundamental about the universe's architecture—it's the same cognitive operation playing out at different scales.

This fascination is in my genes, I suppose. I'm the son of an electrical engineer who encouraged me to build gadgets, and I loved building them as much as I loved being outdoors, collecting plants and animals to study in my bedroom terrarium. I never had to choose between the soldering iron and the natural world; they were always inseparable aspects of the same curiosity. My father gave me the skills to build what I imagined, which meant I was never limited to asking only questions that existing tools could answer.

That dual inheritance shaped everything that followed. Three decades leading UC field stations, pioneering embedded sensor networks before they were commonplace, integrating robotics into ecological research—it was all driven by the recognition that observation and instrumentation are not separate endeavors but coupled aspects of discovery. You build tools to satisfy curiosity, and the tools reveal phenomena that generate new curiosity, requiring new tools. The boy with the bedroom terrarium became the director orchestrating drone swarms and autonomous water samplers across mountain reserves, but the fundamental impulse remained constant.

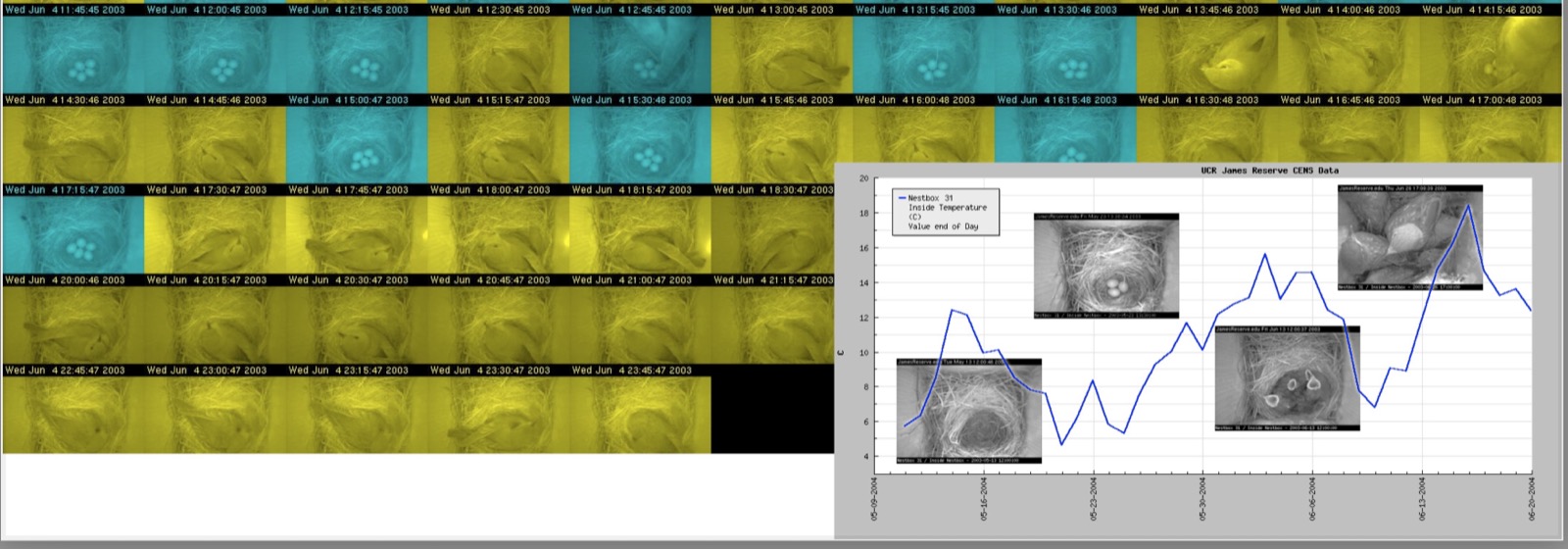

One of our most important discoveries came from exactly this approach. Working with the NSF's Center for Embedded Networked Sensing, we deployed continuous environmental monitors and nest cameras to study bird reproduction. What we found was something traditional field methods—periodic nest checks and weather station averages—could never capture: the precise environmental thresholds where nesting success collapses into failure.

The pattern was devastating in its clarity. Prolonged warming periods triggered ancestral cues—time to nest—but increasingly volatile climate meant those cues were no longer reliable. When freezing weather followed unseasonable warmth, we documented catastrophic failures. We weren't just observing correlation; we were capturing the dynamic interaction between environmental stress and biological resilience at the moment of threshold crossing. It was a window into how climate change doesn't just shift conditions—it decouples coevolutionary relationships that took millennia to establish.

These perturbations aren't rare anomalies. They're the new baseline. As warming accelerates, phenological mismatches proliferate: spring insect emergence no longer synchronized with songbird nestling food demands, plant flowering out of sync with pollinator life cycles, predator-prey relationships where one species shifts range or timing faster than the other. Historical ecological partners find themselves displaced in both space and time. Suitable habitat shifts poleward and upslope, but species can't necessarily follow. Coevolved communities disassemble. The question isn't whether ecosystems will change—it's whether they can reorganize fast enough to maintain function, or whether we're witnessing fundamental unraveling.

Understanding these dynamics requires capturing interactions across scales: spatial, temporal, biological, physical. That was always the goal of our sensor network research: not just collecting more data points, but revealing the coupled processes that drive system behavior. Yet here's the tragedy that haunts me: those data streams are effectively lost. Eleven years after the CENS project ended, with no one managing data curation, decades of continuous environmental monitoring have largely evaporated, decomposing on obsolete storage systems, inaccessible and unanalyzed.

And the cruel irony is that we now have the analytical tools—machine learning for pattern detection, advanced statistical methods for multivariate time series, computational power to process years of high-frequency data—but the observations themselves are gone. How many threshold crossings are buried in that lost data? How many early warning signals of climate-driven disruption would be obvious now in retrospect? The data was collected when continuous monitoring was revolutionary, when the instrumentation itself was the research frontier. Now we have the analytical sophistication to extract insights we couldn't have recognized at the time, but the raw material has vanished.

This loss taught me something fundamental about the structure of scientific inquiry. Institutional projects end. Funding cycles turn over. Personnel move on. But ecological and evolutionary processes unfold over decades and centuries. The real scientific value in environmental monitoring accrues over timescales that institutional frameworks aren't designed to support. You need persistence and commitment that transcend grant periods and career transitions.

Which brings me back to where I began—with curiosity about whether artificial intelligence can develop genuine understanding, and with my own response to that lost archive of observations. For the past several years, I've been building what I call the Macroscope: a personal research infrastructure for continuous, coupled observation across four domains—EARTH (abiotic conditions), LIFE (biological phenomena), HOME (built habitat) and SELF (personal metrics and cognitive state). It's deliberately designed for permanence and evolution, hosted on servers I control in my home laboratory, structured to grow with my questions rather than conforming to external mandates or funding cycles.

The Macroscope represents a different paradigm. Not institutional big science with its institutional vulnerabilities, but individual inquiry with infrastructure scaled to personal capability. It's a lifetime research program, continuous and evolving, designed to serve my own curiosity rather than someone else's priorities. The boy building sensors with his father's help has become the retired field station director running a LAMP stack on gigabit fiber, orchestrating distributed observation networks that integrate everything from weather stations to phenology cameras to personal health monitoring.

I'm 71 years old now, and I harbor no illusions about permanence. The Macroscope will likely die with me unless the paradigm catches on with others. That's the productization challenge: what we've built together—the database schemas, the API integrations, the sensor protocols—requires comfort with LAMP stacks and a certain fluency with technical infrastructure. The barrier to entry is high. To make this truly adoptable would require simplified deployment packages, standardized sensor protocols, pre-built interface components, documentation aimed at naturalists rather than programmers. That's a massive engineering effort on top of the science I actually want to do.

So perhaps the legacy isn't about thousands of people running Macroscope clones. Perhaps it's about the paradigm itself—the idea that personal-scale distributed sensing can constitute legitimate scientific inquiry, that an individual with modern tools can maintain lifetime observation programs that transcend institutional constraints, that curiosity-driven exploration at human scale can reveal phenomena that institutional big science misses precisely because it lacks the temporal continuity and personal investment that individual programs can sustain.

Which loops back to those large language models developing metalinguistic abilities. These systems are learning to recognize patterns in patterns, to reason about structure rather than just exemplify it, to move from classification to meta-classification. But they lack something fundamental: curiosity. They don't wonder "what if?" and then build tools to investigate. They can analyze, but they don't discover. They respond to prompts but generate none of their own.

Human inquiry—my inquiry—is different. It's driven by intrinsic fascination with how things work, how systems interact, what patterns emerge when you observe patiently and systematically over time. The drive to understand complex interactions, to chart future trajectories through actual comprehension rather than statistical extrapolation, to build instruments that extend observation into domains previously inaccessible—that's distinctly biological, distinctly human, distinctly personal.

The terrarium in my childhood bedroom and the sensor networks spanning my adult career are expressions of the same impulse: the need to observe, to classify, to understand the relationships between things. Classification systems are how we organize knowledge, but understanding emerges from recognizing how classifications interact, how boundaries shift, how hierarchies reveal deeper patterns when examined across scales.

That paper I read this morning about metalinguistic abilities in AI systems? It's remarkable because it shows machines beginning to reason about reasoning itself. But I keep returning to the question it implicitly raises: what is understanding, really? Is it pattern recognition at sufficient scale and sophistication? Or is it something irreducibly biological—the product of embodied curiosity, mortality, the need to make sense of a world we're embedded in rather than trained on?

I don't know the answer. But I know that my drive to understand—to build tools that reveal interactions, to maintain observations over decades, to integrate data across domains and scales—emerges from being alive in a world I find endlessly fascinating and frustratingly opaque. The Macroscope is my instrument for that lifetime inquiry. Whether it outlives me is almost beside the point. What matters is that it exists now, capturing relationships between environmental conditions and biological responses, between external phenomena and internal experience, between the scales we can measure and the patterns they reveal.

The art of classification—from bedroom terrariums to cosmic structure—is ultimately about recognizing connections. Not just sorting things into categories, but understanding how categories relate, how boundaries blur, how complexity emerges from interaction. That's what draws me to watch AI systems develop analytical capabilities. That's what drives me to maintain sensor networks tracking environmental thresholds. That's what motivates this entire research program.

Understanding the complex interactions between objects and environments, charting future trajectories through actual comprehension of coupled dynamics—that remains the central goal. Whether pursued with soldering irons and childhood curiosity, with embedded sensors across mountain reserves, or with personal infrastructure built for lifetime inquiry, it's always been about the same thing: making sense of how the world works, one observation, one classification, one discovered relationship at a time.

References

- Beguš, G., Dabkowski, M., & Rhodes, R. (2025). Large linguistic models: Investigating LLMs' metalinguistic abilities. IEEE Transactions on Artificial Intelligence. DOI: 10.1109/TAI.2025.3575745