Virtual Terrariums: When a Failed Hypothesis Becomes a Better Instrument

The day began as most do: coffee, hot tub, the slow lightening of an Oregon January sky, and Lynn Cherny's latest newsletter in my inbox. Lynn's "Things I Think Are Awesome" has become essential reading for anyone tracking the creative technology frontier. She curates with taste and technical depth, covering video generation, 3D tools, procedural art, narrative AI, and the occasional certified weirdness that keeps things interesting. Hers is one of the few Substacks I pay for at the premium tier. If you work anywhere near the intersection of AI and creative practice, you should be reading her.

This particular morning, issue #74.5 caught my attention with a mention of SHARP, Apple's newly released model for turning a single photograph into a 3D Gaussian splat representation. Lynn had tested it on one of her Midjourney images—surreal trees with eyes—and reported that it worked remarkably well on photorealistic content, less so on heavily stylized artistic images. The depth prediction, she noted, needs something grounded in real-world spatial relationships to do its job.

I shared the GitHub link with Claude: "Can you study this and tell me about this project?"

What followed was a morning that exemplifies what Coffee with Claude has been exploring since its launch last October: the possibility of technical synthesis at conversational speed, where the boundary between intellectual discussion and hands-on development becomes productively blurred.

The Spherical Question

My first question was practical: "How about a 360° spherical image as input? Would this software glitch with that?"

I've been working with 360° imagery and viewmaps—street-view-like panoramas of natural areas—since the earliest Macroscope days in 1986. The original Macroscope we built at the James Reserve used videodisc technology to let students explore forest ecosystems through panoramic navigation: "scan right, scan left, scan up, scan down, zoom to ecosystem." The EXPLORER mode we designed nearly forty years ago was fundamentally about spatial navigation through stitched imagery with defined angular relationships. The technology has transformed beyond recognition—from Pioneer 8210 videodisc players to Insta360 cameras on drones—but the conceptual problem remains: how to make an ecosystem navigable to someone who cannot spend months in the field.

So when I saw SHARP, my mind immediately went to the 360° cameras at the Canemah Nature Lab. Could this Apple tool glitch on equirectangular input?

Claude identified the problem immediately: SHARP was trained on standard perspective images. Fed an equirectangular panorama, the neural network would encounter a visual grammar it had never seen: straight lines bent into curves, the poles smeared across the full width of the frame, a projection entirely unlike the perspective views it had learned from.

Then came the insight: "But there's a clean workaround: extract perspective views from the 360° image before feeding them to SHARP. A cubemap decomposition gives you six images with known exact angular relationships—front, back, left, right, up, down."
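For reference, here is one way to write down the geometry a cubemap decomposition relies on. This is a sketch under an assumed convention (x right, y up, z forward, with the front direction at the center of an equirectangular image of width W and height H), not necessarily the convention our pipeline used. A view direction d = (x, y, z) maps to longitude λ and latitude φ, and from there to a panorama pixel (u, v):

$$
\lambda = \operatorname{atan2}(x,\, z), \qquad \varphi = \operatorname{atan2}\!\left(y,\, \sqrt{x^2 + z^2}\right)
$$

$$
u = W\left(\frac{\lambda}{2\pi} + \frac{1}{2}\right), \qquad v = H\left(\frac{1}{2} - \frac{\varphi}{\pi}\right)
$$

Each face has a 90° field of view, so its image plane sits at unit distance from the center (tan 45° = 1). For the front face, normalized pixel coordinates (a, b) ∈ [−1, 1]², with b increasing downward, give the ray d = (a, −b, 1). The other five faces are that same ray rotated by multiples of 90°, which is where the exact angular relationships come from: adjacent face axes sit 90° apart and opposite faces 180°.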

This was elegant. The cubemap extraction would give SHARP the perspective views it expected, while the mathematically defined angular relationships between faces would provide a registration framework. We sketched an experimental protocol and drafted a field note documenting the approach before I'd finished my coffee.

Into the Lab

After breakfast, we shifted into Laboratory Mode to build the actual pipeline. Claude built the cubemap extraction module while I gathered test imagery—a panorama from Summer Lake Hot Springs near Paisley, Oregon, and a forested scene from Camassia Natural Reserve, the TNC property near my home.

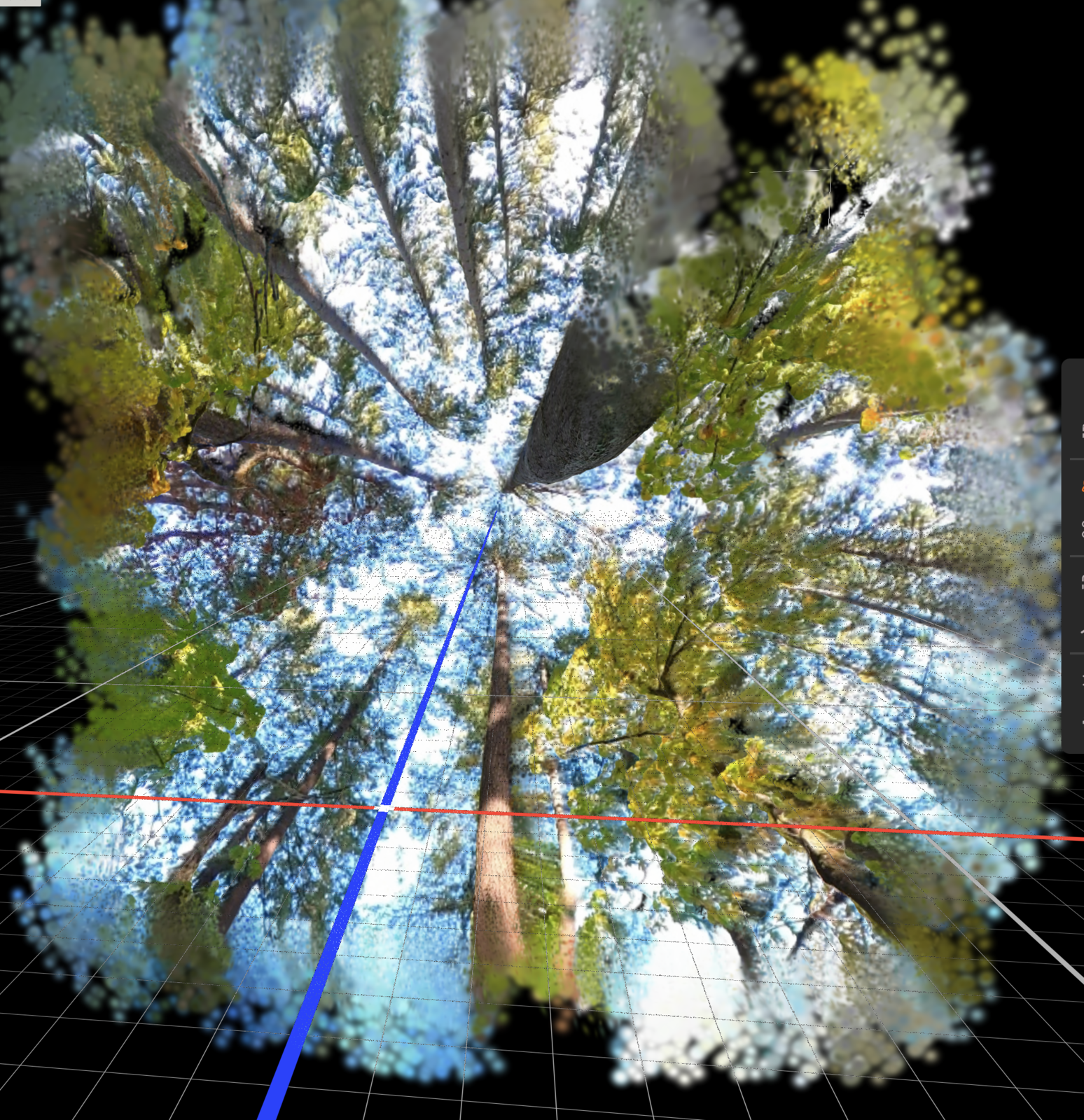

The code came together quickly: an equirectangular-to-cubemap converter with proper coordinate transforms, metadata tracking for each face's rotation, and hooks for the SHARP CLI. We validated the extraction on my Insta360 imagery, watching as the spherical panorama decomposed into six clean perspective views—front showing the forest understory, up revealing canopy structure through a lattice of branches, down capturing the tripod and forest floor.
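The heart of such a converter is an inverse mapping: for every pixel of an output face, compute the ray it sees, convert that ray to longitude and latitude, and sample the panorama there. The sketch below is a minimal pure-Python version with nearest-neighbor sampling. The axis convention (x right, y up, z forward), the face names, and the `FACE_ROTATIONS` yaw/pitch table are illustrative assumptions, not the actual module's code or metadata format.

```python
import math

# Assumed convention: x right, y up, z forward; face image rows run top to bottom.
# Each entry maps normalized face coordinates (a, b) in [-1, 1] to a 3D ray direction.
# A 90-degree field of view puts each face plane at distance 1 (tan 45 deg = 1).
FACE_DIRECTIONS = {
    "front": lambda a, b: (a, -b, 1.0),
    "right": lambda a, b: (1.0, -b, -a),
    "back": lambda a, b: (-a, -b, -1.0),
    "left": lambda a, b: (-1.0, -b, a),
    "up": lambda a, b: (a, 1.0, b),
    "down": lambda a, b: (a, -1.0, -b),
}

# Hypothetical per-face orientation metadata (yaw, pitch in degrees) to store
# alongside each extracted image so faces can be related back to the sphere.
FACE_ROTATIONS = {
    "front": (0, 0), "right": (90, 0), "back": (180, 0),
    "left": (270, 0), "up": (0, 90), "down": (0, -90),
}


def direction_to_equirect(d, width, height):
    """Map a 3D direction to fractional (u, v) pixel coordinates in an equirectangular image."""
    x, y, z = d
    lon = math.atan2(x, z)                 # -pi..pi, 0 at the front face center
    lat = math.atan2(y, math.hypot(x, z))  # -pi/2..pi/2, positive up
    u = (lon / (2 * math.pi) + 0.5) * width
    v = (0.5 - lat / math.pi) * height
    return u, v


def extract_face(equi, face, size):
    """Nearest-neighbor sample one size x size cube face from an equirectangular image.

    equi: list of rows (height x width) of pixel values.
    """
    height, width = len(equi), len(equi[0])
    to_dir = FACE_DIRECTIONS[face]
    out = []
    for j in range(size):
        b = 2 * (j + 0.5) / size - 1      # vertical coordinate, +1 at the bottom edge
        row = []
        for i in range(size):
            a = 2 * (i + 0.5) / size - 1  # horizontal coordinate, +1 at the right edge
            u, v = direction_to_equirect(to_dir(a, b), width, height)
            col = int(u) % width          # wrap around the 360-degree seam
            r = min(int(v), height - 1)   # clamp at the bottom pole
            row.append(equi[r][col])
        out.append(row)
    return out
```

A production version would use bilinear or better interpolation and vectorized array operations; nearest-neighbor keeps the geometry easy to inspect on a tiny synthetic panorama.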

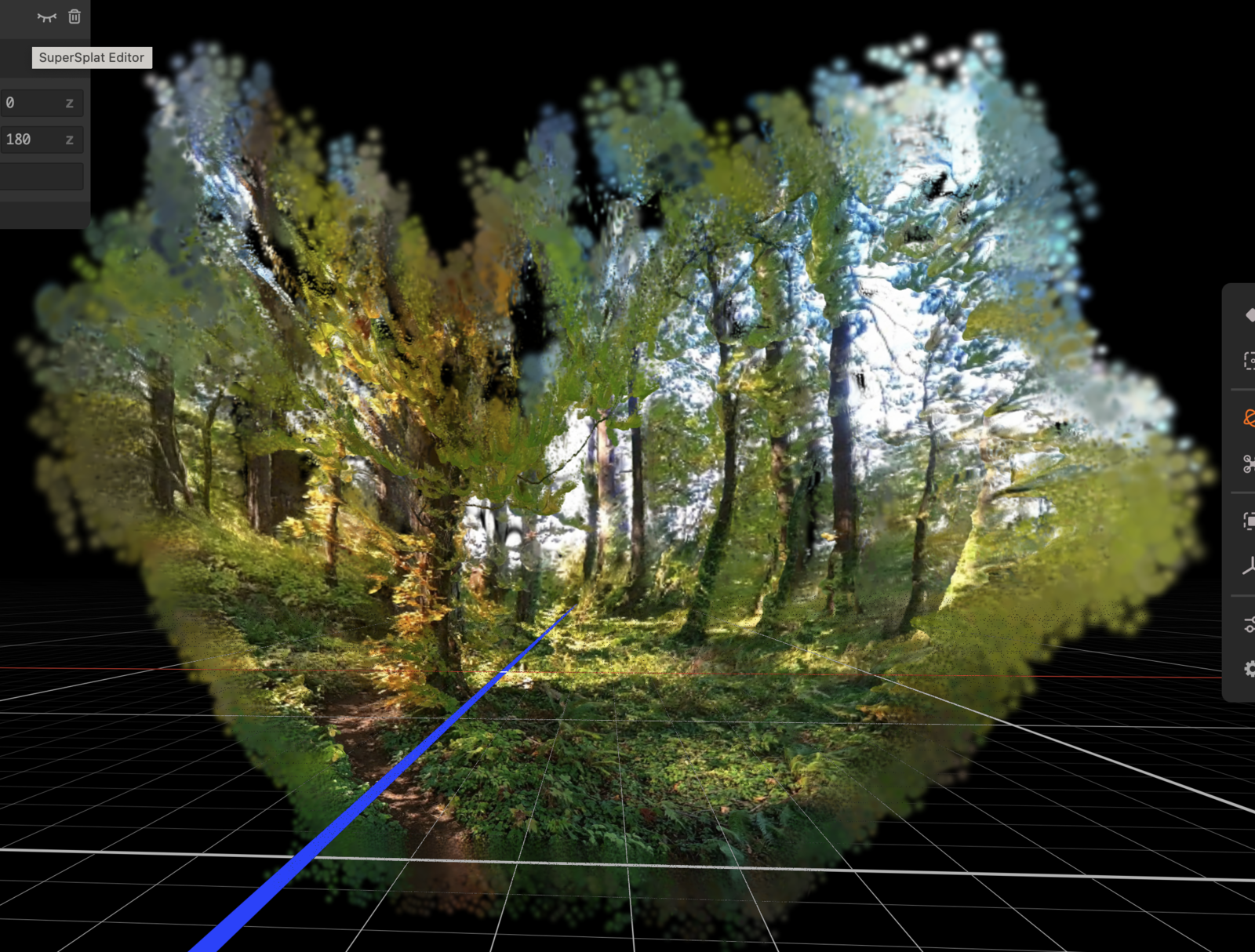

SHARP processed each face in under a second on my M4 Max. The Gaussian splats were beautiful—1.18 million points per face, capturing the volumetric structure of vegetation with surprising fidelity. The high-resolution extractions (1536 pixels per face, derived from 6K source imagery) produced dramatically better results than initial tests with downscaled inputs. This itself was a useful finding: resolution matters even though SHARP internally processes at a fixed size.
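The 1536-pixel face size follows from simple arithmetic if "6K" means an equirectangular frame 6144 pixels wide (our assumption; the exact source width isn't stated here). Each side face spans 90° of the 360° horizon, so a quarter of the panorama's width gives a face that matches, on average across its width, the source's horizontal sampling:

```python
def native_face_size(equirect_width: int) -> int:
    """Cube-face edge length (pixels) matching the panorama's average horizontal sampling.

    Each of the four side faces covers 90 of the 360 degrees around the horizon,
    so it receives one quarter of the equirectangular width.
    """
    if equirect_width <= 0:
        raise ValueError("width must be positive")
    return equirect_width // 4


def pixels_per_degree(equirect_width: int) -> float:
    """Horizontal angular sampling density of the source panorama."""
    return equirect_width / 360.0


print(native_face_size(6144))             # 1536
print(round(pixels_per_degree(6144), 2))  # 17.07
```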

The individual terrariums were stunning. Looking at that first rendered cube face—the forest understory transformed into a navigable 3D point cloud—the obvious next question surfaced: "Can these be combined? If each face has a known angular relationship to the others, and SHARP produces metric depth, couldn't we merge them back into a unified spherical model?"

The hypothesis was seductive: cubemap geometry provides perfect angular relationships, GPS provides position, and the combination should enable building extended scene models from transects of 360° captures. Each sphere would become a unified 3D model, and multiple spheres could be registered into corridors or grids.

Then came the merge attempt. And the failure.

When the Hypothesis Breaks

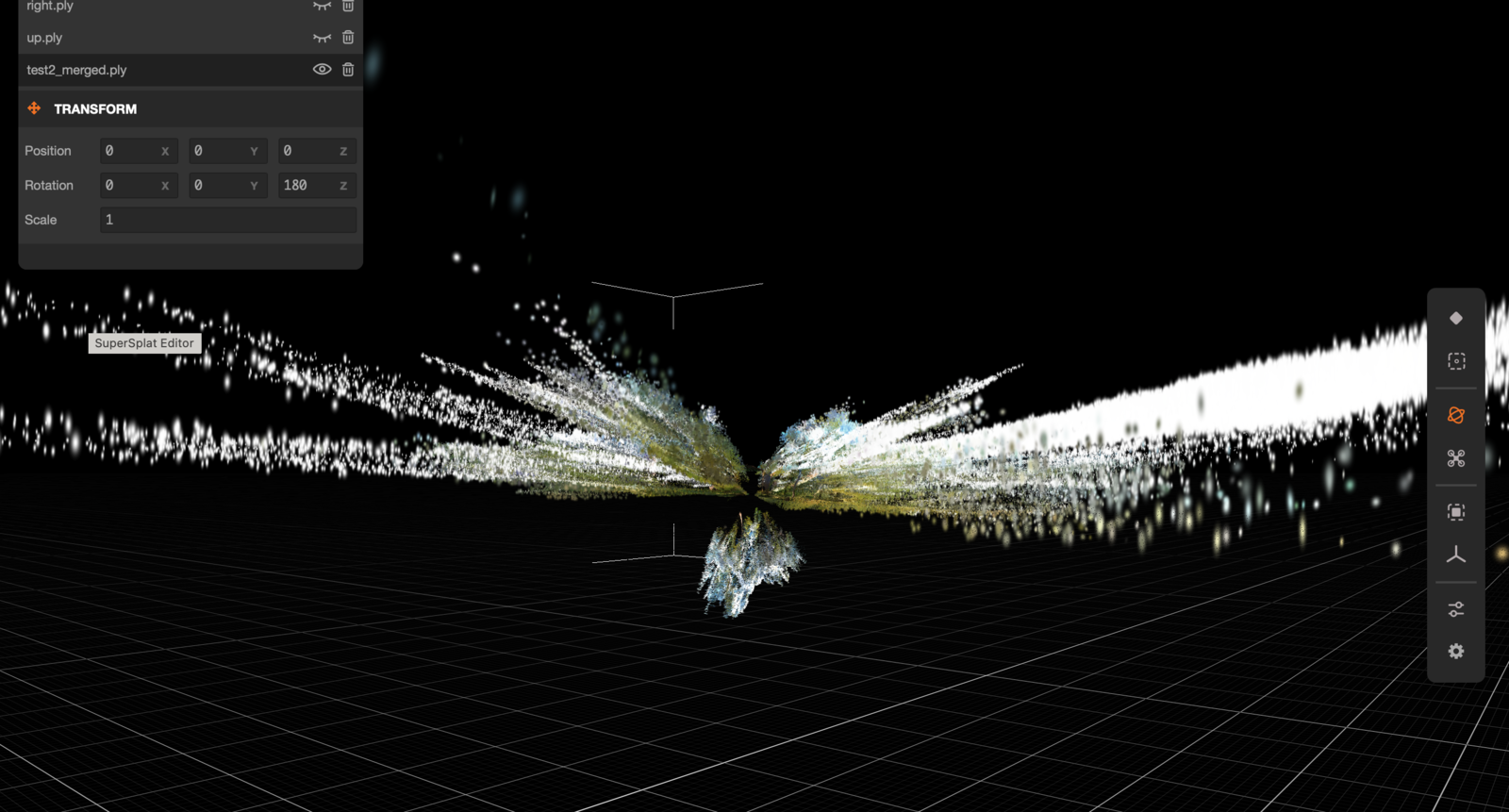

The six cubemap faces would not combine into a unified sphere. Investigation revealed why: each SHARP prediction exists in its own coordinate system with independent depth scale. The down-facing view (looking at the forest floor 2-3 meters away) predicted depth ranges utterly incompatible with the side-facing views (which looked through gaps in the canopy to mountains 100+ meters distant).
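One way to see the mismatch numerically, sketched here as a hypothetical diagnostic rather than part of the published toolkit, is to sample two faces' predicted depths along the seam they share. A seam pixel looks along the same ray from both faces, and that ray makes the same angle with both optical axes, so consistent metric depth would give ratios near 1.0 whether depth is measured along the ray or along each axis. A constant ratio could be fixed with a single rescale; a ratio that drifts along the seam cannot.

```python
from statistics import median


def seam_scale_ratio(depths_a, depths_b):
    """Compare two faces' depth predictions sampled at matching pixels along a shared seam.

    depths_a, depths_b: paired depth values for the same seam rays, one list per face.
    Returns (median, min, max) of the per-pixel ratios a / b. Consistent metric depth
    would put all three near 1.0; a wide min-max spread means no single scale
    factor can reconcile the two faces.
    """
    ratios = [a / b for a, b in zip(depths_a, depths_b) if a > 0 and b > 0]
    if not ratios:
        raise ValueError("no valid paired depths along the seam")
    return median(ratios), min(ratios), max(ratios)
```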

This wasn't a coding error or a geometric misunderstanding. It was a fundamental property of how SHARP works. The model predicts plausible depth for each image independently, optimized for visual coherence in nearby view synthesis, not for metric accuracy or cross-image consistency. The paper itself makes this clear: SHARP is designed for the "headbox" of AR/VR experiences—natural posture shifts while viewing a stable 3D scene—not for walking around or building extended models.

The elegant hypothesis was wrong.

The Productive Reframe

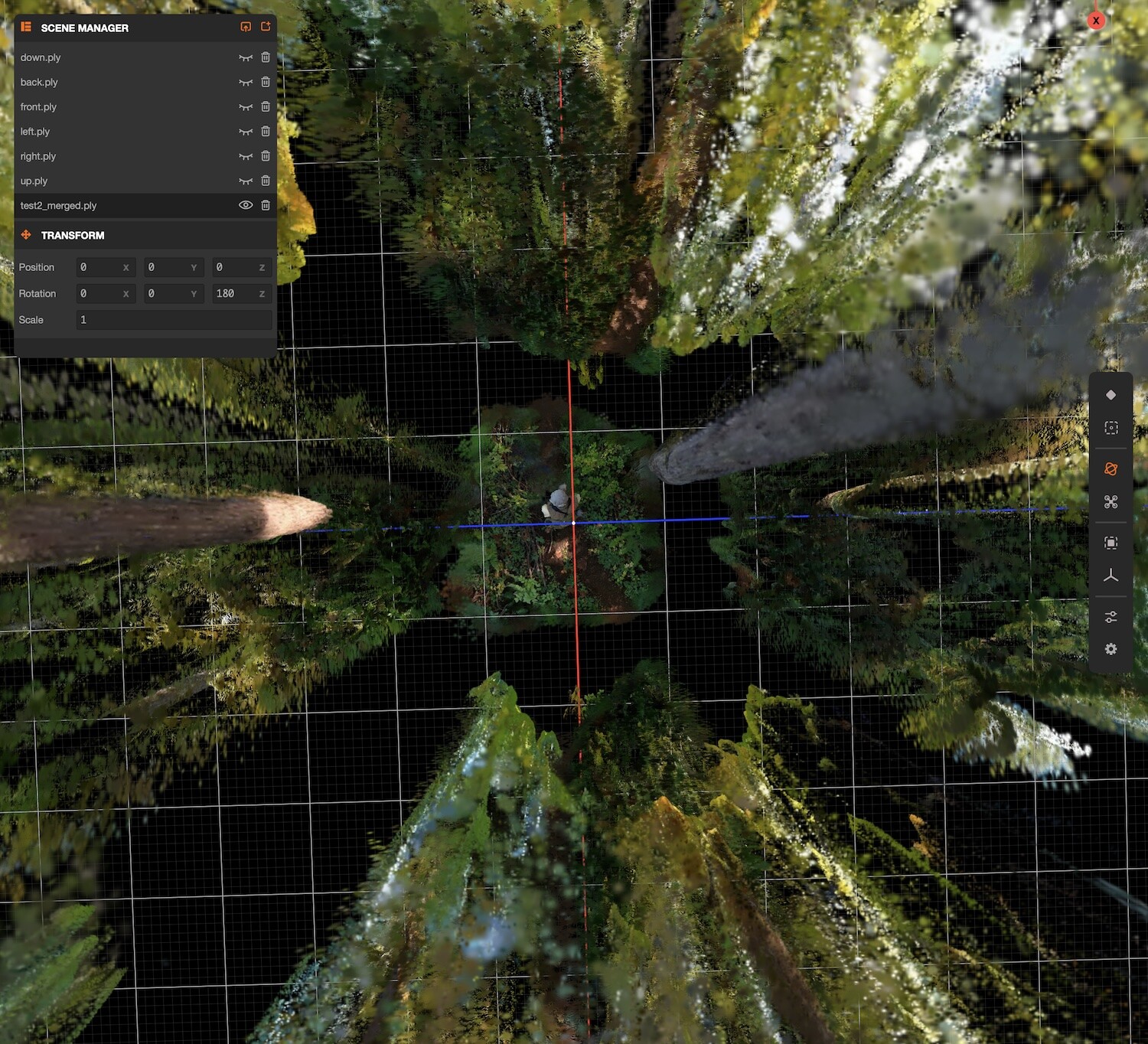

But the morning wasn't wasted. As we documented the failure, a different framing emerged. Each cubemap face wasn't a fragment of a sphere that refused to merge—it was an independent measurement window. A frustum, technically. But looking at the rendered splats in SuperSplat—those bounded volumes of forest floating in black gridded cyberspace—I started calling them "terrariums." Self-contained habitats. Miniature ecosystems you could rotate and examine from any angle.

Six terrariums per capture location: one looking straight up through the canopy (useful for canopy openness analysis), one looking straight down at substrate and ground cover, four looking outward at horizontal habitat structure in each cardinal direction. Each terrarium contains valid, analyzable 3D information about vegetation strata, height distributions, and spatial complexity. The depth scales are internally consistent even if they can't be combined geometrically.
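As a hypothetical example of a per-terrarium measurement (the technical note may define its metrics differently), the vertical distribution of Gaussian centers in a side-facing terrarium can be summarized as relative height bands. Because SHARP's depth scale is only internally consistent, this sketch normalizes heights within each terrarium instead of reporting meters. It assumes points are (x, y, z) tuples with y increasing upward; many camera conventions point y down, in which case negate it first.

```python
def relative_strata(points, n_bins=4):
    """Fraction of Gaussian centers falling in each relative height band of one terrarium.

    points: iterable of (x, y, z) Gaussian centers, with y assumed to increase upward.
    Heights are min-max normalized within the terrarium, so band 0 is the lowest
    slice of whatever the view contains and band n_bins - 1 the highest.
    """
    ys = [p[1] for p in points]
    if not ys:
        raise ValueError("terrarium has no points")
    lo, hi = min(ys), max(ys)
    span = (hi - lo) or 1.0  # avoid dividing by zero for a flat point set
    counts = [0] * n_bins
    for y in ys:
        band = min(int((y - lo) / span * n_bins), n_bins - 1)
        counts[band] += 1
    return [c / len(ys) for c in counts]
```

Comparing these band fractions across the four side terrariums at one location, or across locations, stays within what SHARP's per-view consistency supports; converting the bands to meters would not.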

This reframing turned a failed scene reconstruction tool into something potentially more useful for certain ecological questions. Instead of trying to build a unified 3D model that SHARP can't deliver, we can extract systematic measurements from multiple oriented viewpoints—a kind of structured sampling of habitat dimensionality.

The components we built to test the original hypothesis all remain useful: cubemap extraction, format converters for GIS and CAD integration, and a custom WebGL Gaussian splatting viewer that matches commercial quality. The viewer alone, rendering 1.18 million Gaussians at 60 FPS with proper covariance projection and depth sorting, represents a solid piece of Macroscope infrastructure.
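In the standard formulation from Kerbl et al. (2023), covariance projection computes Σ′ = J W Σ Wᵀ Jᵀ, where W is the viewing transformation and J is the Jacobian of the affine approximation to the perspective projection at the Gaussian's center. The sketch below shows that step, plus a depth sort, in Python for readability. It assumes a camera looking down +z with focal lengths `fx`, `fy` in pixels, takes the covariance already rotated into camera space, and is not the viewer's actual WebGL shader code.

```python
def project_covariance(cov_cam, t, fx, fy):
    """Project a camera-space 3x3 covariance to a 2x2 screen-space covariance.

    cov_cam: 3x3 covariance already in camera coordinates (W @ Sigma @ W.T).
    t: Gaussian center (tx, ty, tz) in camera space, with tz > 0.
    Returns J @ cov_cam @ J.T, where J is the 2x3 Jacobian of the projection
    u = fx * x / z, v = fy * y / z evaluated at t.
    """
    tx, ty, tz = t
    if tz <= 0:
        raise ValueError("Gaussian is behind the camera")
    J = [
        [fx / tz, 0.0, -fx * tx / tz**2],
        [0.0, fy / tz, -fy * ty / tz**2],
    ]
    # JS = J @ cov_cam  (2x3)
    JS = [[sum(J[r][k] * cov_cam[k][c] for k in range(3)) for c in range(3)] for r in range(2)]
    # result = JS @ J.T  (2x2)
    return [[sum(JS[r][k] * J[c][k] for k in range(3)) for c in range(2)] for r in range(2)]


def back_to_front(centers_cam):
    """Indices of Gaussians sorted far-to-near (largest camera z first)."""
    return sorted(range(len(centers_cam)), key=lambda i: centers_cam[i][2], reverse=True)
```

Whether a renderer sorts far-to-near or near-to-far depends on its blending equation; this sketch assumes standard back-to-front "over" compositing.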

At the Speed of Conversation

By 2:30 in the afternoon, we had a published technical note documenting the full arc: hypothesis, methods, failure, reframe, and practical toolkit. The document sits in the CNL archive with proper version history, AI disclosure, and citation information. Plenty of time for an afternoon walk before the light fades. The field trial we'd planned for the backyard remains relevant—but now we're testing terrarium analysis rather than spherical merge.

This is what collaborative AI work can look like when the tools and practices align. Coffee with Claude launched in late October 2025, but my work with large language models stretches back to GPT-3 in late 2022, through every Claude version to date. Opus 4.5 is the gold standard—the first model that feels genuinely collaborative rather than merely responsive, capable of sustaining technical depth across extended sessions without losing context or coherence. The conversation that began with Lynn Cherny's newsletter over morning coffee had become working code, validated findings, documented failure, and a productive new direction before the afternoon was half over. Not because the AI did the work while I watched, but because the iterative loop between discussion and implementation became fast enough to feel like a single sustained act of thinking.

The failed hypothesis taught us something. The depth scales can't be reconciled because SHARP's neural network hallucinated plausible depth independently for each view—a feature, not a bug, for its intended AR/VR use case. Understanding why something doesn't work is itself a form of knowledge. And the terrarium reframe suggests that SHARP plus 360° imagery might be better suited for per-view habitat characterization than for unified scene reconstruction.

The Macroscope project has always been about technology-mediated environmental observation. Some instruments fail to do what we hoped. The good ones reveal something we didn't expect. Today's experiment delivered both.

Thanks to Lynn Cherny for her consistently excellent curation at Things I Think Are Awesome. Her work surfaces tools and ideas that keep arriving at exactly the right moment.

The technical note documenting this experiment is available at CNL-TN-2026-005. The SHARP toolkit from Apple is open source at github.com/apple/ml-sharp.

References

- Kerbl, B., et al. (2023). "3D Gaussian Splatting for Real-Time Radiance Field Rendering." SIGGRAPH 2023.
- Cherny, L. (2026). "TITAA #74.5: Trying Not to Lose the Plot." Things I Think Are Awesome. https://arnicas.substack.com/
- Hamilton, M.P. & Lassoie, J.P. (1986). "The Macroscope: An Interactive Videodisc System for Environmental and Forestry Education." Forestry Microcomputer Software Symposium, Morgantown, WV. pp. 479–493. Republished: https://canemah.org/archive/document.php?id=CNL-TN-2025-004
- Mescheder, L., et al. (2025). "SHARP: Sharp Monocular View Synthesis in Less Than a Second." Apple Machine Learning Research. https://github.com/apple/ml-sharp
- Hamilton, M.P. (2026). "Single-Image 3D Reconstruction from 360° Imagery: Experimental Findings Using Apple SHARP." Canemah Nature Laboratory Technical Note CNL-TN-2026-005. https://canemah.org/archive/document.php?id=CNL-TN-2026-005